Another great re:Invent is in the books! As a container aficionado, one announcement stood out more than most: EKS Auto Mode. I already planned to spend most of my re:Invent time refreshing on EKS best practices for work, but this announcement kicked my curiosity up to 11...

Introduction

The EKS Auto Mode announcement excited me the most at re:Invent 2024. EKS users now have a simpler path to launch a cluster that comes preconfigured with critical features, including Karpenter for intelligent node scaling and the AWS Load Balancer Controller, among others. These capabilities are great on their own, but to explain why they matter so much to me, I'll briefly review my personal history with EKS.

Wayback Machine for Trevor's Career

When I joined AWS as a Solutions Architect (SA) in 2016, AWS-managed Kubernetes was a very popular (to put it mildly) request from my customers. I once asked a customer if they had any feedback about features that ECS could add to improve their experience, and their response was simply, "it's not Kubernetes." (Nothing further to discuss at that point 🥴). Fast forward to the EKS announcement in 2017, and the excitement amongst our customers (and the relief amongst SAs who could finally position an AWS offering 😅) was palpable.

The initial launch was great, but there was room for improvement in the user experience. The EKS team and the community iterated furiously on capabilities like eksctl, courtesy of AWS and Weaveworks (RIP), to simplify cluster deployments, and the ALB Ingress Controller (today's AWS Load Balancer Controller), courtesy of Ticketmaster and CoreOS (now Red Hat/IBM). Later on, the EKS team released features like managed node groups and add-on management to further simplify cluster administration. These were all great, but they did not quite deliver the developer experience customers had asked for: a Kubernetes API, with AWS abstracting away all cluster management, including upgrades, add-on management, and node management. That is, until December 3, 2024, when EKS Auto Mode launched.

Back to the Future, How Do You Deploy an EKS Cluster in Auto Mode?...powered by Pulumi

The EKS blog does a great job of showing how to get started with the console. So, I'll share some Pulumi goodness to automate the process. Along the way, I'll highlight operational improvements such as scaling from and to zero based on workload demands.

Deploy EKS Auto Mode Clusters with Pulumi

You can find my sample code in its entirety on GitHub. I adapted the example code from Pulumi's site to grant Cluster Admin privileges to my user for kubectl commands. In the samples below, I'll highlight notable differences from deploying a standard EKS cluster and my modification:

    package main

    import (
        "encoding/json"
        "fmt"

        "github.com/pulumi/pulumi-aws/sdk/v6/go/aws/eks"
        "github.com/pulumi/pulumi-aws/sdk/v6/go/aws/iam"
        "github.com/pulumi/pulumi/sdk/v3/go/pulumi"
    )

    func main() {
        pulumi.Run(func(ctx *pulumi.Context) error {
            // ...

            eksCluster, err := eks.NewCluster(ctx, "blog-cluster", &eks.ClusterArgs{
                Name: pulumi.String("blog-cluster"),
                // Authenticate through EKS access entries (the EKS API).
                AccessConfig: &eks.ClusterAccessConfigArgs{
                    AuthenticationMode: pulumi.String("API"),
                },
                RoleArn: clusterRole.Arn,
                Version: pulumi.String("1.30"),
                // Auto Mode, part 1: EKS manages compute through the built-in node pool.
                ComputeConfig: &eks.ClusterComputeConfigArgs{
                    Enabled: pulumi.Bool(true),
                    NodePools: pulumi.StringArray{
                        pulumi.String("general-purpose"),
                    },
                    NodeRoleArn: node.Arn,
                },
                // Auto Mode, part 2: EKS manages load balancing.
                KubernetesNetworkConfig: &eks.ClusterKubernetesNetworkConfigArgs{
                    ElasticLoadBalancing: &eks.ClusterKubernetesNetworkConfigElasticLoadBalancingArgs{
                        Enabled: pulumi.Bool(true),
                    },
                },
                // Auto Mode, part 3: EKS manages block storage.
                StorageConfig: &eks.ClusterStorageConfigArgs{
                    BlockStorage: &eks.ClusterStorageConfigBlockStorageArgs{
                        Enabled: pulumi.Bool(true),
                    },
                },
                // Auto Mode, part 4: do not bootstrap the self-managed add-ons.
                BootstrapSelfManagedAddons: pulumi.Bool(false),
                VpcConfig: &eks.ClusterVpcConfigArgs{
                    EndpointPrivateAccess: pulumi.Bool(true),
                    EndpointPublicAccess:  pulumi.Bool(true),
                    SubnetIds:             pulumi.StringArray(subnetIds),
                },
            })

            // ...
        })
    }

Notice there is no single Auto Mode setting. Instead, you enable Auto Mode through a combination of settings:

- enabling ComputeConfig, KubernetesNetworkConfig, and StorageConfig

- disabling BootstrapSelfManagedAddons
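Because Auto Mode is the combination of several flags rather than one switch, it is easy to end up with a half-enabled configuration. The following is a minimal sketch of a pre-flight check, not part of my sample repository. The `autoModeSettings` type and its `validate` method are hypothetical names, and the rule that the three capabilities must be switched on or off together reflects my reading of the provider documentation, so treat it as an assumption to verify.

```go
package main

import (
	"errors"
	"fmt"
)

// autoModeSettings is a hypothetical, simplified mirror of the four cluster
// arguments discussed above. It is not a Pulumi type.
type autoModeSettings struct {
	ComputeEnabled              bool
	ElasticLoadBalancingEnabled bool
	BlockStorageEnabled         bool
	BootstrapSelfManagedAddons  bool
}

// validate reports whether the settings describe an Auto Mode cluster.
// Assumption: the three capabilities must be enabled (or disabled) together,
// and BootstrapSelfManagedAddons must be false when they are enabled.
func (s autoModeSettings) validate() (bool, error) {
	enabled := 0
	for _, on := range []bool{s.ComputeEnabled, s.ElasticLoadBalancingEnabled, s.BlockStorageEnabled} {
		if on {
			enabled++
		}
	}
	switch {
	case enabled == 0:
		// A standard (non-Auto Mode) cluster.
		return false, nil
	case enabled < 3:
		return false, errors.New("compute, load balancing, and block storage must be enabled together")
	case s.BootstrapSelfManagedAddons:
		return false, errors.New("BootstrapSelfManagedAddons must be false when Auto Mode is enabled")
	}
	return true, nil
}

func main() {
	// The same combination used in the cluster definition above.
	s := autoModeSettings{
		ComputeEnabled:              true,
		ElasticLoadBalancingEnabled: true,
		BlockStorageEnabled:         true,
		BootstrapSelfManagedAddons:  false,
	}
	autoMode, err := s.validate()
	fmt.Println("auto mode:", autoMode, "error:", err)
}
```

A check like this could run before `pulumi up` in a unit test, so a misconfigured combination fails fast instead of surfacing as an API error during deployment.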

    // Retrieve my IAM user info
    existingUser, err := iam.LookupUser(ctx, &iam.LookupUserArgs{
        UserName: iamUserName,
    }, nil)
    if err != nil {
        return err
    }

    // Create an AccessEntry to grant the IAM user access to the EKS cluster
    _, err = eks.NewAccessEntry(ctx, "eksAccessEntry", &eks.AccessEntryArgs{
        ClusterName:  eksCluster.Name,
        PrincipalArn: pulumi.String(existingUser.Arn),
    })
    if err != nil {
        return err
    }

    // Create an AccessPolicyAssociation to attach the cluster admin policy
    // to the IAM user, scoped to the entire cluster
    _, err = eks.NewAccessPolicyAssociation(ctx, "eksAccessPolicyAssociation", &eks.AccessPolicyAssociationArgs{
        ClusterName:  eksCluster.Name,
        PrincipalArn: pulumi.String(existingUser.Arn),
        PolicyArn:    pulumi.String("arn:aws:eks::aws:cluster-access-policy/AmazonEKSClusterAdminPolicy"),
        AccessScope: eks.AccessPolicyAssociationAccessScopeArgs{
            Type: pulumi.String("cluster"),
        },
    })
    if err != nil {
        return err
    }

In this section of code, I retrieve my IAM user's ARN, create an access entry for that user in the EKS cluster using the EKS API, and associate the AmazonEKSClusterAdminPolicy access policy with the user at cluster scope, so that I can run any kubectl command as needed.

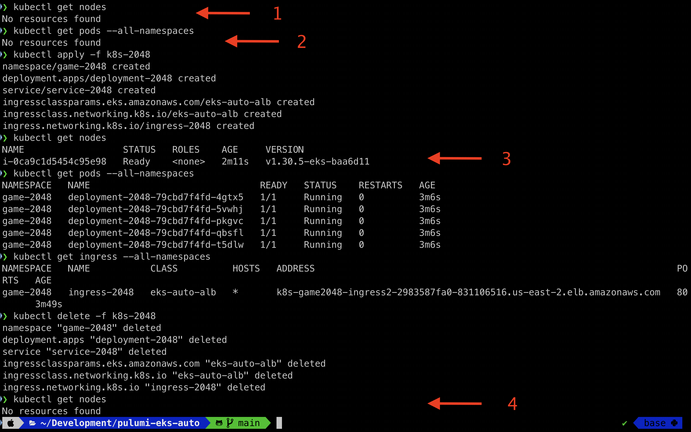

Let's take a look at the deployed cluster and how it behaves using kubectl at the CLI:

In the screenshot, note the following numbered observations:

- After the initial cluster deployment, I have no Kubernetes nodes provisioned.

- I also do not have any pods provisioned: the EKS-managed add-ons are not visible to the user.

- My first node is only provisioned after deploying my first workload.

- My node is automatically terminated when there are no more workloads running! Karpenter helps you optimize your compute for cost.

He Who Controls the EC2 Spice, Controls the Universe

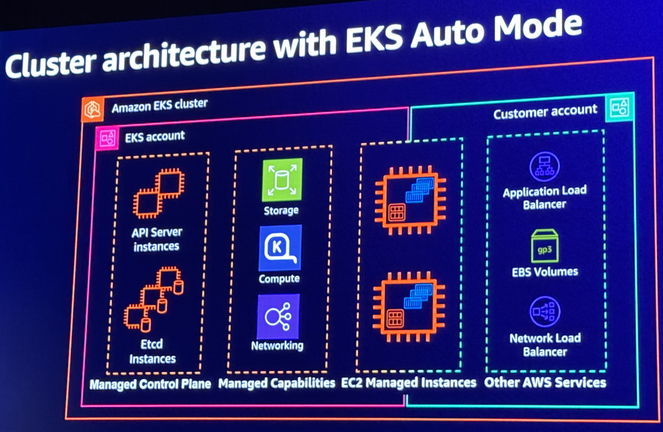

One interesting architectural change with EKS Auto Mode is the management of the EC2 nodes. The EKS service team places the EC2 instances in your AWS account instead of in the EKS account. This allows the service team to support new VPC features sooner than if they had to manage cross-account ENIs. Your next question may be, "How does the EKS service team prevent accidental deletions of the EKS nodes?!"

The EKS team worked with the IAM and EC2 service teams to develop a unique management capability that prevents users from modifying the EC2 nodes managed by EKS Auto Mode, even though the instances reside in users' accounts. This arrangement is diagrammed in the following image:

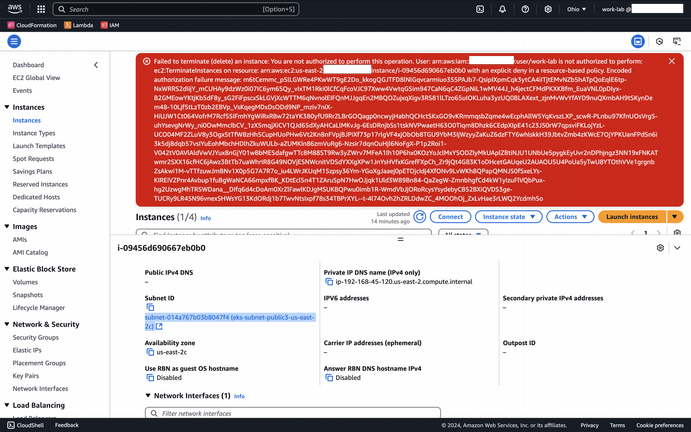

Note that the EC2 managed instances are drawn straddling both the EKS account and the customer account, to illustrate that the EKS service team manages the node lifecycle on behalf of the user. I verified this myself by trying to delete a node while logged in as an admin user: the attempt failed with an error message.

Be Aware of the Costs

EKS Auto Mode brings significant quality-of-life improvements to operating EKS clusters, including the ability to scale from and to zero compute nodes based on workload demand, and automated upgrades of both the Kubernetes version and service-managed add-ons such as CoreDNS. This convenience comes at an additional cost on top of the standard EKS charges. Specifically, there is an hourly charge (with per-second granularity and a one-minute minimum) for each EC2 node that is managed by EKS Auto Mode, in addition to the standard EC2 instance charges and the hourly EKS cluster charge. Note that each instance type incurs a different EKS Auto Mode hourly charge, and pricing can vary according to your cluster's region.

Let's say you have an EKS Auto Mode cluster with enough workloads to keep three (3) m5a.xlarge instances running for an entire month in us-east-1 (aka N. Virginia). Your costs for the month would be as follows:

| Cost Component | Hourly Charge | Monthly Charge (730 hours) |
|---|---|---|
| EKS Cluster | $0.10 | $73.00 |
| m5a.xlarge (3) | $0.516 | $376.68 |
| Auto Mode Cost (3) | $0.06192 | $45.20 |
| Total | $0.68 | $495 |

For this particular instance type and in this region, EKS Auto Mode is adding ~$45 per month to your bill. This cost may be worth it to your organization so that your operations teams can focus their energy on managing the uptime and performance of your actual application! FYI, your savings plan and reserved instance pricing will only apply to the EC2 instance cost; these discounts do not apply to the per-instance Auto Mode Cost.

Wait a Minute! Doesn't EKS Fargate Already Eliminate Cluster Management?!

EKS Fargate is still available for customers who prefer that compute consumption paradigm. However, there are aspects of Fargate that do not work for some customers. A few that come to mind are:

- DaemonSets - if your application needs to run as a DaemonSet (ex: observability tools), you cannot use Fargate.

- GPUs - machine learning training and inference workloads often need access to GPUs, which are not currently available on Fargate.

- Cost visibility - cost management tools like Kubecost are not able to report on Fargate workloads as accurately as they can report on costs for standard EKS workloads.

Learn More from the re:Invent 2024 Recordings

At the KUB204 talk by Alex Kestner and Todd Neal, it was cool to see Pulumi featured as a validated partner for the EKS Auto Mode launch.

Kudos to Pulumi for being on top of this feature release for their users!

Wrapping Things Up...

In this blog post, we discussed how you can use Pulumi to deploy an EKS cluster with Auto Mode enabled. EKS Auto Mode simplifies the operational experience of administering a managed Kubernetes cluster on AWS.

If you found this article useful, let me know on BlueSky or on LinkedIn!